Did you know that by 2020, up to 65% of all enterprises are expected to be using IoT devices? It is also becoming increasingly obvious that the IoT and the cloud have an inseparable, Romeo and Juliet type of relationship. However, only about a third of the data collected by sensors worldwide is analyzed at the source. For the past decade or so, cloud computing has dominated the IoT industry and has been used by enterprises for all IoT components. Pushing workloads to remote servers has become the industry standard for almost all data processing and storage. This has created many challenges, particularly in areas such as latency, network bandwidth, reliability, and security. To overcome these challenges, new forms of computing have been created to extend cloud computing to the edge of an enterprise’s network. Two of these forms are fog computing and mist computing.

In this blog post, we will talk about cloud, fog, and mist computing. We will define these buzzwords and their use cases, then provide an example of each. Along the way, we will also discuss how each computing model came to exist.

Cloud Computing

Cloud computing is the delivery of on-demand computing services – from applications to storage and processing power – typically over the internet.

Rather than owning its own computing infrastructure or data centers, a company can rent access to anything from applications to storage from a cloud service provider. Cloud computing is used for a huge range of services, from consumer services such as Gmail to software vendors that now offer their applications as services over the internet on a subscription model rather than as stand-alone products.

Cloud computing has many benefits:

· Using cloud services means that companies do not have to buy or maintain their own computing infrastructures.

· Using cloud services means that companies can move faster on projects and test out concepts without lengthy IT procurement and big upfront costs because firms only pay for the resources they consume.

· For a company with an application that has big peaks in usage, for example an application that is only used once a week, it makes financial sense to host it in the cloud instead of having dedicated hardware and software lying idle for long periods.

However, cloud computing does not come without its limitations:

· Some companies might be reluctant to put critical data with a cloud service provider that is also used by a rival, fearing the loss of any competitive advantage they had before the data was sent to the cloud.

· Cloud computing is unsuitable for applications that need data to be analysed in real time, because the data must travel from the node all the way up to the cloud.

· Communication eats up a lot of power, which makes sending data up to the cloud quite a power-hungry task.

One example of a cloud service is the Amazon Web Services (AWS) IoT Platform. Amazon dominates the cloud services market and has an enormous variety of tools and features available on its cloud platform.

It is an extremely scalable platform that can support billions of devices and trillions of interactions between them. AWS offers a software development kit to help developers build applications to run on the platform, and charges per million messages sent between a device and the server.

As mentioned previously, relying mainly on cloud computing, as we have done for the past decade, creates many challenges, such as high latency, high bandwidth consumption, poor reliability, and poor security. This is one of the many reasons why fog computing came about.

Fog Computing

Fog computing was first introduced by Cisco with the goal of extending cloud computing to the edge of a company’s network. Fog computing is the concept of a network infrastructure that stretches from the outer edges, where data is created, to where it will eventually be stored, whether that is in the cloud or in a customer’s data center. Mung Chiang, one of the United States’ leading researchers on fog and edge computing, gave quite a good definition of fog computing:

“Fog provides the missing link for what data needs to be pushed to the cloud, and what can be analyzed locally, at the edge.”

A fog computing infrastructure can have a variety of components and functions. Examples include gateways that provide access to edge nodes, wired and wireless routers, and global cloud services.

There are many benefits to fog computing:

· It gives organizations more choices for processing data wherever it is most appropriate to do so, producing better insights once the data is analysed and allowing more real-time actions to be taken on the analysed data.

· Handling more data at the edge, and sending only what is really needed to the cloud, reduces the amount of data you have in the cloud and therefore improves your security.

· Fog computing can also create low-latency network connections between devices and analytics end points. This reduces the amount of bandwidth required compared with sending the data back to the cloud or the data center for processing.
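
To make the "only send what is really needed" idea concrete, here is a minimal Python sketch of a fog gateway that condenses raw sensor readings into one summary per node before anything is forwarded to the cloud. The function name, node names, readings, and alarm threshold are all hypothetical and chosen only for illustration; a real gateway would add its own transport, buffering, and error handling.

```python
from statistics import mean


def summarize_at_gateway(readings_by_node, alarm_threshold):
    """Reduce raw per-node readings to one compact summary per node.

    Meant to run on a (hypothetical) fog gateway: only the returned
    summaries would be forwarded to the cloud, not the raw readings.
    """
    summaries = []
    for node_id, readings in readings_by_node.items():
        if not readings:
            continue  # nothing to report for this node
        peak = max(readings)
        summaries.append({
            "node": node_id,
            "count": len(readings),
            "mean": round(mean(readings), 2),
            "max": peak,
            "alarm": peak > alarm_threshold,  # flag nodes that need attention
        })
    return summaries


# Made-up readings from two nodes attached to the same gateway.
raw = {
    "pump-1": [20.1, 20.3, 20.2, 20.4],
    "pump-2": [21.0, 35.5, 21.2, 21.1],
}
summaries = summarize_at_gateway(raw, alarm_threshold=30.0)
raw_count = sum(len(values) for values in raw.values())
print(f"{raw_count} raw readings reduced to {len(summaries)} summaries")
for summary in summaries:
    print(summary)
```

In this sketch the cloud still learns that one node crossed the threshold, but it receives two small records instead of every raw reading.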

An example of a use case for fog computing is in smart cities, where utility systems increasingly use real-time data to run more efficiently. Sometimes this data is generated in a remote place, so processing it close to where it was created is essential. Fog computing is ideal in a case like this.

Essentially, there are hundreds of applications where real-time data analytics is needed to respond to events as quickly and efficiently as possible. Examples include banks that use real-time data analytics to monitor fraud, and self-driving cars, where the car needs to process what is happening around it instantly to avoid an accident.

As fascinating as fog computing sounds, some applications require ultra-low latency, which has made people look for other options for computing power. One such computing model is known as mist computing.

Mist Computing

Mist computing takes place at the extreme edge of a network, which typically consists of microcontrollers and sensors. Mist computing uses microcomputers and microcontrollers to feed into fog computing nodes and potentially onward towards the centralised (cloud) computing services.

The two main goals of mist computing are:

· Enabling resource harvesting by using the computation and communication capabilities available on the sensor itself.

· Allowing arbitrary computations to be provisioned, deployed, managed, and monitored on the sensor itself.

There are many benefits to mist computing as well. Communication takes five times the power of computing in an embedded microcontroller. By collecting raw data at the edge of your network and then condensing it through filtering, anomaly identification mechanisms, and pattern recognition, you can send only the essential data up to the gateway, router, or server, which in turn conserves battery power as well as bandwidth. Mist computing is a great fit for low-power situations where extending battery life is a core concern.
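
The trade-off can be illustrated with a small Python sketch. It applies a simple deadband filter (one possible filtering mechanism, not necessarily the one used in any particular product) and then compares a rough energy budget with and without filtering. The energy model is an assumption made for this example: processing one sample costs 1 unit and sending one message costs 5 units, mirroring the five-to-one ratio mentioned above. The readings and the deadband value are made up.

```python
def filter_readings(readings, deadband):
    """Keep a reading only if it differs from the last sent value by more than deadband."""
    sent = []
    last_sent = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) > deadband:
            sent.append(value)
            last_sent = value
    return sent


def energy_units(samples_processed, messages_sent, compute_cost=1.0, tx_cost=5.0):
    """Rough energy estimate in arbitrary units (assumed model, 5:1 radio-to-compute)."""
    return samples_processed * compute_cost + messages_sent * tx_cost


# Made-up temperature samples with one short excursion.
readings = [20.0, 20.1, 20.0, 20.2, 25.0, 25.1, 20.1, 20.0]
to_send = filter_readings(readings, deadband=0.5)

# Baseline: every sample is transmitted, with no local processing.
send_all = energy_units(samples_processed=0, messages_sent=len(readings))
# Mist approach: every sample is processed locally, only changes are transmitted.
send_filtered = energy_units(samples_processed=len(readings), messages_sent=len(to_send))

print("values sent:", to_send)
print("energy, send everything:", send_all)
print("energy, filter locally:", send_filtered)
```

Under these assumptions, the extra local computation pays for itself as soon as filtering removes a meaningful share of the transmissions.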

Mist computing allows for the following features:

· Local analytics and decision-making.

· Highly robust data and applications.

· Data access control mechanisms to enforce privacy consent at a local level.

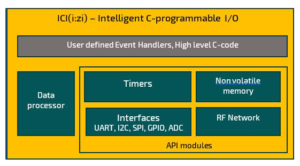

One example of mist computing is Radiocrafts’ own Intelligent C-Programmable I/O platform, or as we like to call it, ICI. ICI is a tool that lets users access the on-board resources of the RF module, such as the processing engine, the digital and RF interfaces, timers, and memory. It enables network designers to create their own applications using high-level C code.

One of the use cases for ICI is mist computing, where it reduces bandwidth requirements and enables fast responses to local events. The Radiocrafts modules supported by ICI are over-the-air upgradable. This means that users can upgrade their user-defined ICI firmware while the network is deployed and in full operation, so new sensors and actuators can be included when the need arises. Almost any application can be created in 100 lines of code or less.

The high-level abstraction layer in ICI allows designers with no understanding of a real-time operating system, or knowledge of the chipset architecture, to create an intelligent network node. For example, intelligent node coding can include:

- Initiating transmission to the base station based on local triggers

- Interfacing with any sensor or actuator, including complex bus interfaces

- Initiating complex event-driven control and/or transmit functions

- Processing of local signals

- Supporting advanced security measures

- Logging local data

- Creating local alarms

- Supporting an advanced RF protocol
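
A few of these behaviours (local logging, a local alarm, and a transmission triggered by a local event) can be sketched in a handful of lines. ICI applications are written in C against Radiocrafts’ own API, which is not reproduced here. The Python below is only a language-neutral illustration of the control flow, and every name in it (`NodeSketch`, `on_sample`, `outbox`) is hypothetical.

```python
from collections import deque


class NodeSketch:
    """Illustrative event-driven node; not the ICI API."""

    def __init__(self, alarm_level, log_size=4):
        self.alarm_level = alarm_level
        self.log = deque(maxlen=log_size)  # local log, oldest entries are dropped
        self.outbox = []  # stands in for the radio link to the base station

    def on_sample(self, value):
        """Handle one sensor sample; transmit only when the local trigger fires."""
        self.log.append(value)
        if value >= self.alarm_level:
            # Local trigger: raise an alarm and send it with the recent history.
            self.outbox.append({
                "alarm": True,
                "value": value,
                "recent": list(self.log),
            })
            return True
        return False


node = NodeSketch(alarm_level=50, log_size=3)
for sample in [10, 12, 11, 55, 13]:
    node.on_sample(sample)

print("messages queued for the base station:", node.outbox)
print("local log:", list(node.log))
```

Only the sample that crosses the alarm level results in a message; the other samples stay in the local log.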

With ICI, creating your own application has never been so easy.

Radiocrafts’ new product lines, RIIoT™ and RIIM™, support ICI.

- To learn about Radiocrafts’ ICI please click here.

- To learn more about RIIoT™ please click here.

- To learn more about RIIM™ please click here.

What to take away from this?

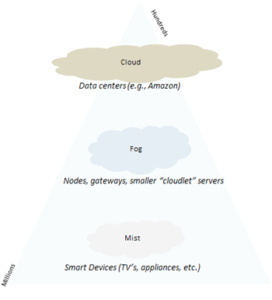

What you should take away from this blog post is that there are several kinds of computing models, such as cloud, fog, and mist computing. Each model has its own advantages and disadvantages, which makes it suitable for specific applications. For example, mist and fog computing are great for real-time data analytics because companies can analyse their data locally, on the sensor itself or on the gateway/router, instead of having to send the data all the way up to the cloud first.

Another important thing to understand is how these computing models relate to one another. We can envision a cloud service provider such as Amazon as the thick cloud layer. Beneath that lies the fog, which consists of cloudlets such as gateways and routers. These support the cloud but are not part of the massive cloud server structure. Beneath the fog, we have the mist, which is made up of smart devices and sensor networks. This area is what we refer to as the edge.

So, what is your opinion on the three different computing models? What other computing models do you think are important for the future of IoT? Do you disagree with any of the statements we have made? Use the comment section below to start a discussion, ask questions, or just to give feedback and say hi. Radiocrafts or another blog reader will answer!